
16. Oct 2025 • 4 minutes read

Cloud migration with Spring Boot: GCP Pub/Sub to Azure Service Bus

Jovica Zorić

Chief Technology Officer

Cloud migration isn’t just a technical project; it’s a business-critical decision driven by changing needs around cost optimization, regulatory compliance, M&A activity, or the wish to keep negotiating leverage with cloud vendors. Once in the cloud, however, cloud-native applications that use managed services create natural lock-in, requiring teams to navigate the APIs, operating models, and performance trade-offs unique to the chosen platform.

In this article, we’ll walk through a migration approach for a Spring Boot-based messaging service from Google Cloud Platform (GCP) to Microsoft Azure, all while keeping business logic intact and minimizing disruption for developers.

Overview

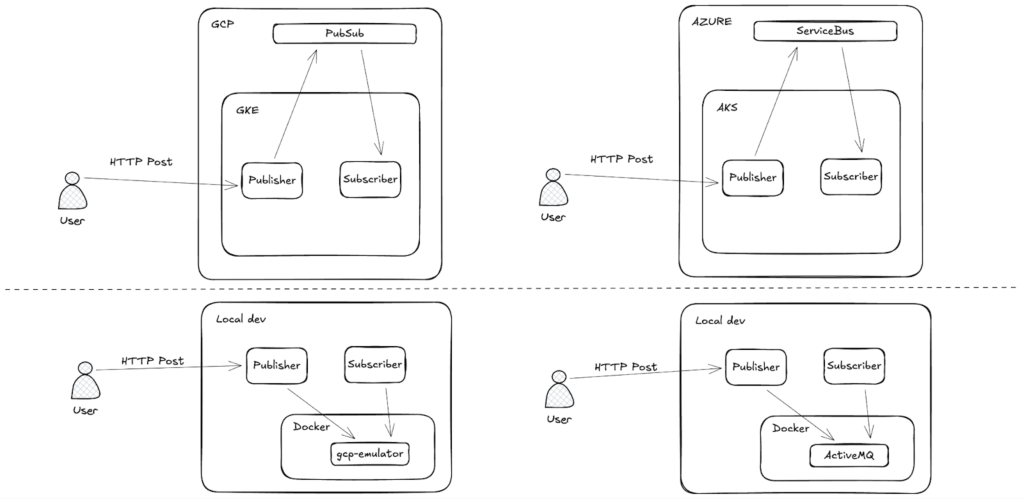

Our reference architecture follows a typical event-driven microservices pattern:

- Publisher service: Receives incoming HTTP requests and publishes events to a message broker

- Subscriber service: Consumes messages from the broker and processes them

On GCP, these applications run inside Google Kubernetes Engine (GKE) and rely on Pub/Sub for messaging. The target on Azure is Azure Kubernetes Service (AKS), with Service Bus providing the messaging layer. Because Kubernetes gives us a portable runtime abstraction, we focus our migration efforts on the messaging layer rather than the entire application stack.

We also can’t overlook our local development setup. To test locally, we use Docker to simulate the cloud services, running the Pub/Sub emulator for GCP and ActiveMQ to stand in for Service Bus through its JMS integration. The diagram at the top of this section shows the overall setup.

Migration strategy

Keeping the application’s core logic unchanged, our cloud-native migration strategy focuses on three important layers:

- Infrastructure: Moving workloads from GKE to AKS and migrating messaging from Pub/Sub to Service Bus.

- Application Configuration: Ensuring that both Publisher and Subscriber can connect to the correct messaging backend while avoiding rewrites of business logic.

- Developer Workflow: Keeping local testing consistent with cloud deployments to minimize friction and maintain developer productivity.

To support these layers, we leverage three complementary abstractions:

- Kubernetes provides a consistent runtime across both GCP and Azure.

- Spring Profiles enable configuration switching without modifying core logic.

- Terraform ensures infrastructure reproducibility.

Implementation details

Infrastructure as Code

It’s been said many times, but it’s worth repeating: reliable migrations can’t be built on manual infrastructure setup, often called ClickOps. Every mouse click is an undocumented decision, a potential inconsistency, and a future failure point. Infrastructure as Code (IaC) replaces fragile manual configuration with a controlled process in which:

- Changes are documented and auditable through version control;

- Teams can collaborate without conflicting manual setups;

- Infrastructure can be reused across environments;

- Deployments are automated and repeatable;

- Scaling and evolving the infrastructure becomes much easier.

GCP infrastructure

We begin on GCP with a minimal Terraform example that provisions a GKE cluster and Pub/Sub resources.

    resource "google_container_cluster" "primary" {
      name                     = "${var.project}-gke"
      location                 = var.region
      deletion_protection      = false
      remove_default_node_pool = true
      initial_node_count       = 1
      network                  = google_compute_network.vpc.name
      subnetwork               = google_compute_subnetwork.subnet.name
    }

    resource "google_container_node_pool" "primary_nodes" {
      name       = google_container_cluster.primary.name
      location   = var.region
      cluster    = google_container_cluster.primary.name
      node_count = var.gke_num_nodes

      autoscaling {
        min_node_count = var.general_purpose_min_node_count
        max_node_count = var.general_purpose_max_node_count
      }

      node_config {
        oauth_scopes = [
          "https://www.googleapis.com/auth/logging.write",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/devstorage.read_only",
          "https://www.googleapis.com/auth/pubsub"
        ]

        labels = {
          env = var.project
        }

        machine_type = var.general_purpose_machine_type
        tags         = ["gke-node", "${var.project}-gke"]

        metadata = {
          disable-legacy-endpoints = "true"
        }
      }
    }

    resource "google_pubsub_topic" "articles" {
      name = "articles"

      labels = {
        env = var.project
      }
    }

    resource "google_pubsub_subscription" "articles_events" {
      name  = "articles-events"
      topic = google_pubsub_topic.articles.id

      labels = {
        env = var.project
      }
    }

Azure infrastructure

The Azure equivalent maintains structural parity while accounting for platform differences:

    resource "azurerm_servicebus_namespace" "main" {
      location            = azurerm_resource_group.rg.location
      name                = var.servicebus_namespace_name
      resource_group_name = azurerm_resource_group.rg.name
      sku                 = var.servicebus_sku
      tags                = var.tags
    }

    resource "azurerm_servicebus_topic" "main" {
      name         = var.servicebus_topic_name
      namespace_id = azurerm_servicebus_namespace.main.id
    }

    resource "azurerm_servicebus_subscription" "main" {
      name               = var.servicebus_subscription_name
      topic_id           = azurerm_servicebus_topic.main.id
      max_delivery_count = 1
    }

    resource "azurerm_user_assigned_identity" "aks" {
      location            = azurerm_resource_group.rg.location
      name                = "${var.cluster_name}-identity"
      resource_group_name = azurerm_resource_group.rg.name
    }

    resource "azurerm_kubernetes_cluster" "main" {
      location            = azurerm_resource_group.rg.location
      name                = var.cluster_name
      resource_group_name = azurerm_resource_group.rg.name
      dns_prefix          = var.cluster_name

      default_node_pool {
        name       = "default"
        vm_size    = var.node_vm_size
        node_count = var.node_count

        upgrade_settings {
          drain_timeout_in_minutes      = 0
          max_surge                     = "10%"
          node_soak_duration_in_minutes = 0
        }
      }

      identity {
        type         = "UserAssigned"
        identity_ids = [azurerm_user_assigned_identity.aks.id]
      }

      tags = var.tags
    }

    resource "azurerm_role_assignment" "acr_pull" {
      principal_id                     = azurerm_kubernetes_cluster.main.kubelet_identity[0].object_id
      role_definition_name             = "AcrPull"
      scope                            = azurerm_container_registry.main.id
      skip_service_principal_aad_check = true
    }
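One setting in the Azure snippet that affects runtime behavior is `max_delivery_count = 1` on the subscription. As we understand the documented Service Bus behavior, a message that is not settled successfully within that many deliveries is moved to the subscription's dead-letter queue, so with a value of 1 a single failed attempt is enough. The plain-Java simulation below sketches that rule. It is an in-memory illustration only: `DeliveryCountSketch`, `Result`, and `deliver` are hypothetical names, not part of the Service Bus client API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// In-memory simulation of a max-delivery-count rule (hypothetical, not the Service Bus SDK).
final class DeliveryCountSketch {

    // Outcome of trying to deliver one message.
    static final class Result {
        final int attempts;
        final boolean deadLettered;

        Result(int attempts, boolean deadLettered) {
            this.attempts = attempts;
            this.deadLettered = deadLettered;
        }
    }

    // Delivers the message until the handler succeeds or attempts reach maxDeliveryCount.
    static Result deliver(String message, int maxDeliveryCount, Predicate<String> handler) {
        int attempts = 0;
        while (attempts < maxDeliveryCount) {
            attempts++;
            if (handler.test(message)) {
                return new Result(attempts, false); // processed and completed
            }
        }
        return new Result(attempts, true); // attempts exhausted: dead-letter
    }

    public static void main(String[] args) {
        // A handler that fails on its first call and succeeds afterwards.
        List<String> seen = new ArrayList<>();
        Predicate<String> flaky = m -> {
            seen.add(m);
            return seen.size() > 1;
        };

        Result strict = deliver("article-1", 1, flaky);
        System.out.println("max=1 deadLettered=" + strict.deadLettered);

        seen.clear();
        Result lenient = deliver("article-1", 3, flaky);
        System.out.println("max=3 deadLettered=" + lenient.deadLettered
                + " attempts=" + lenient.attempts);
    }
}
```

With a limit of 1, transient failures go straight to the dead-letter queue, so it is worth deciding deliberately whether that matches the retry behavior the service had on Pub/Sub.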

Application layer: Spring Boot

The strength of Spring’s profile system lies in its ability to manage environment-specific configurations with ease. By organizing cloud-specific settings into separate profiles, it maintains a clear separation of concerns.
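To make the idea concrete, here is a minimal plain-Java sketch of what profile-based switching amounts to: one messaging port, two implementations, and a selection driven by the active profile names. It deliberately avoids Spring so that it runs on its own. `ProfileSelectionSketch`, `MessagePublisher`, and `forProfiles` are hypothetical names used for illustration and are not Spring's API; in the real application, `@Profile` does this selection declaratively. The profile names mirror the ones used in this article.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java analogy of profile-based wiring (hypothetical; Spring uses @Profile for this).
final class ProfileSelectionSketch {

    // Hypothetical port that the business logic depends on.
    interface MessagePublisher {
        String publish(String payload);
    }

    // Stand-in for the Pub/Sub-backed implementation.
    static final class PubSubPublisher implements MessagePublisher {
        @Override
        public String publish(String payload) {
            return "pubsub:" + payload;
        }
    }

    // Stand-in for the JMS/Service Bus-backed implementation.
    static final class JmsPublisher implements MessagePublisher {
        @Override
        public String publish(String payload) {
            return "jms:" + payload;
        }
    }

    // Picks an implementation from a comma-separated profile list,
    // the same shape as a SPRING_PROFILES_ACTIVE value.
    static MessagePublisher forProfiles(String activeProfiles) {
        List<String> profiles = new ArrayList<>();
        for (String profile : activeProfiles.split(",")) {
            profiles.add(profile.trim());
        }
        if (profiles.contains("gcp") || profiles.contains("dev-gcp")) {
            return new PubSubPublisher();
        }
        if (profiles.contains("azure") || profiles.contains("dev-azure")) {
            return new JmsPublisher();
        }
        throw new IllegalArgumentException("No messaging profile active: " + activeProfiles);
    }

    public static void main(String[] args) {
        // Falls back to the local GCP profile when the variable is not set.
        String active = System.getenv().getOrDefault("SPRING_PROFILES_ACTIVE", "dev-gcp");
        System.out.println(forProfiles(active).publish("hello"));
    }
}
```

The calling code only ever sees `MessagePublisher`, which is the property that lets the business logic stay unchanged while the backend is swapped.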

Kubernetes deployment configuration

Before we move to application configuration, let’s write a simple Kubernetes deployment manifest for our services. Among other settings, it uses an environment variable to activate the appropriate Spring profile.

Publisher service example:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: publisher-deployment
    spec:
      selector:
        matchLabels:
          app: publisher
      replicas: 1
      template:
        metadata:
          labels:
            app: publisher
        spec:
          containers:
            - name: publisher
              image: "<hub>/publisher:1"
              ports:
                - containerPort: 8080
              env:
                - name: SPRING_PROFILES_ACTIVE
                  value: gcp
    ....

Core setup (platform agnostic)

Publisher

The Publisher is implemented as a Spring Boot application that exposes an HTTP endpoint and pushes events to the message broker through a Spring Integration gateway.

    public record Event(String id, String name) {}

    @MessagingGateway
    public interface IntegrationGateway {

        @Gateway(requestChannel = "articlesMessageChannel")
        void send(Object message);
    }

    @RestController
    public class PublisherAPI {

        private static final Logger LOGGER = LoggerFactory.getLogger(PublisherAPI.class);

        final IntegrationGateway integrationGateway;

        public PublisherAPI(IntegrationGateway integrationGateway) {
            this.integrationGateway = integrationGateway;
        }

        @PostMapping("/send")
        public void send(@RequestBody Event message) {
            LOGGER.info(message.toString());
            integrationGateway.send(message);
        }
    }

. . . . .
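To illustrate why the controller can stay untouched during the migration, here is a plain-Java sketch of the gateway indirection: the caller sends to a channel, and whichever handler is subscribed to that channel decides where the event goes. It mimics the roles of `@MessagingGateway` and `@ServiceActivator` in a much simplified form and is not Spring Integration itself; `GatewaySketch`, `Channel`, and `gatewayFor` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Simplified stand-in for gateway -> channel -> handler wiring (hypothetical, not Spring Integration).
final class GatewaySketch {

    // A publish-subscribe channel: every subscribed handler receives each message.
    static final class Channel {
        private final List<Consumer<Object>> handlers = new ArrayList<>();

        void subscribe(Consumer<Object> handler) {
            handlers.add(handler);
        }

        void send(Object message) {
            for (Consumer<Object> handler : handlers) {
                handler.accept(message);
            }
        }
    }

    // The only type the HTTP layer needs to know about.
    interface IntegrationGateway {
        void send(Object message);
    }

    // Binds a gateway to a channel, which requestChannel does declaratively in Spring.
    static IntegrationGateway gatewayFor(Channel channel) {
        return channel::send;
    }

    public static void main(String[] args) {
        Channel articles = new Channel();
        List<String> sentToBroker = new ArrayList<>();

        // Swapping this one handler is what a profile switch amounts to.
        articles.subscribe(message -> sentToBroker.add("broker <- " + message));

        IntegrationGateway gateway = gatewayFor(articles);
        gateway.send("event-42");
        System.out.println(sentToBroker);
    }
}
```

The design choice is that the platform-specific part is confined to the handler behind the channel, so the REST controller and the gateway interface compile and behave the same under either profile.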

Subscriber

The Subscriber is a Spring Boot application that consumes events from the message broker and handles them via defined business logic.

public record Event(String id, String name) {}

.....

GCP specific configuration

When running on GCP (activated via gcp or dev-gcp profile):

Publisher configuration:

    @Configuration
    @Profile({"dev-gcp", "gcp"})
    public class PubSubPublisherConfiguration {

        @Bean
        public MessageChannel articlesMessageChannel() {
            return new PublishSubscribeChannel();
        }

        @Bean
        public PubSubMessageConverter messageConverter() {
            return new JacksonPubSubMessageConverter(new ObjectMapper());
        }

        @Bean
        @ServiceActivator(inputChannel = "articlesMessageChannel")
        public PubSubMessageHandler articlesOutboundAdapter(PubSubTemplate pubSubTemplate,
                PublisherProperties publisherProperties) {
            return new PubSubMessageHandler(pubSubTemplate, publisherProperties.getArticlesTopic());
        }
    }

Subscriber configuration

    @Configuration
    @Profile({"dev-gcp", "gcp"})
    public class PubSubSubscriberConfiguration {

        private static final Logger LOGGER = LoggerFactory.getLogger(PubSubSubscriberConfiguration.class);

        @Bean
        public MessageChannel articlesMessageChannel() {
            return new PublishSubscribeChannel();
        }

        @Bean
        public PubSubMessageConverter messageConverter() {
            return new JacksonPubSubMessageConverter(new ObjectMapper());
        }

        @Bean
        public PubSubInboundChannelAdapter listingChannelAdapter(
                @Qualifier("articlesMessageChannel") MessageChannel inputChannel,
                PubSubTemplate pubSubTemplate, SubscriberProperties properties) {
            var adapter = new PubSubInboundChannelAdapter(pubSubTemplate, properties.getArticlesSubscription());
            adapter.setOutputChannel(inputChannel);
            adapter.setAckMode(AckMode.MANUAL);
            adapter.setPayloadType(Event.class);
            return adapter;
        }

        @ServiceActivator(inputChannel = "articlesMessageChannel")
        public void consume(@Payload Event payload,
                @Header(GcpPubSubHeaders.ORIGINAL_MESSAGE) BasicAcknowledgeablePubsubMessage message) {
            LOGGER.info(payload.toString());
            message.ack();
        }
    }
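The subscriber uses `AckMode.MANUAL`, so a message counts as handled only once `message.ack()` is called. A common refinement, not shown in the article's code, is to acknowledge after successful processing and negatively acknowledge on failure so that the broker can redeliver. The sketch below shows that control flow in plain Java. The `Acknowledgeable` interface and `RecordingMessage` class are hypothetical stand-ins for the broker message type; this is not the Spring Cloud GCP API.

```java
import java.util.function.Consumer;

// Control-flow sketch for manual acknowledgment (hypothetical, not the Pub/Sub client API).
final class ManualAckSketch {

    // Hypothetical stand-in for an acknowledgeable broker message.
    interface Acknowledgeable {
        void ack();
        void nack();
    }

    // Records which settlement call was made, for demonstration purposes.
    static final class RecordingMessage implements Acknowledgeable {
        String outcome = "unsettled";

        @Override
        public void ack() {
            outcome = "acked";
        }

        @Override
        public void nack() {
            outcome = "nacked";
        }
    }

    // Runs the business logic, then acks on success or nacks on failure.
    static <T> void consume(T payload, Acknowledgeable message, Consumer<T> businessLogic) {
        try {
            businessLogic.accept(payload);
            message.ack();
        } catch (RuntimeException e) {
            message.nack(); // ask the broker to redeliver
        }
    }

    public static void main(String[] args) {
        RecordingMessage ok = new RecordingMessage();
        consume("event-1", ok, p -> System.out.println("processed " + p));
        System.out.println(ok.outcome);

        RecordingMessage failed = new RecordingMessage();
        consume("event-2", failed, p -> {
            throw new IllegalStateException("boom");
        });
        System.out.println(failed.outcome);
    }
}
```

Keeping the settlement decision in one place like this also makes it easier to compare with the JMS side, where a transacted session plays a similar role.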

Azure specific configuration

When running on Azure (activated via azure or dev-azure profile):

Publisher configuration

    @Configuration
    @Profile({"dev-azure", "azure"})
    public class JMSPublisherConfiguration {

        @Bean
        public MessageChannel articlesMessageChannel() {
            return new PublishSubscribeChannel();
        }

        @Bean
        @ServiceActivator(inputChannel = "articlesMessageChannel")
        public MessageHandler jmsMessageHandler(JmsTemplate jmsTemplate, PublisherProperties publisherProperties) {
            return message -> {
                jmsTemplate.convertAndSend(publisherProperties.getArticlesTopic(), message.getPayload());
            };
        }

        @Bean
        public JmsTemplate jmsTemplate(ConnectionFactory connectionFactory, ObjectMapper objectMapper,
                ObservationRegistry observationRegistry) {
            JmsTemplate jmsTemplate = new JmsTemplate();
            jmsTemplate.setConnectionFactory(connectionFactory);
            jmsTemplate.setMessageConverter(new JMSMessageConverter<>(objectMapper, Event.class));
            jmsTemplate.setMessageIdEnabled(true);
            jmsTemplate.setPubSubDomain(true);
            jmsTemplate.setObservationRegistry(observationRegistry);
            return jmsTemplate;
        }
    }

Subscriber configuration

    @Configuration
    @Profile({"dev-azure", "azure"})
    public class JMSSubscriberConfiguration {

        @Bean
        public DefaultJmsListenerContainerFactory jmsArticlesListenerContainerFactory(
                ConnectionFactory connectionFactory, ObjectMapper objectMapper,
                ObservationRegistry observationRegistry) {
            DefaultJmsListenerContainerFactory factory = new DefaultJmsListenerContainerFactory();
            factory.setConnectionFactory(connectionFactory);
            factory.setMessageConverter(new JMSMessageConverter<>(objectMapper, Event.class));
            factory.setSessionTransacted(true);
            factory.setPubSubDomain(true);
            factory.setObservationRegistry(observationRegistry);
            return factory;
        }
    }

Developer experience and Local development

A migration strategy that ignores developer experience, including local testing, is doomed to fail. For our use case, engineers need to test changes locally without provisioning cloud resources. Our solution relies on platform-specific local stand-ins that keep the application-facing APIs compatible. We use Docker to spin up the matching “emulator” container for the active profile.

For GCP

    docker run -it -p 8085:8085 google/cloud-sdk:530.0.0-emulators gcloud beta emulators pubsub start --host-port=0.0.0.0:8085

For Azure

Use ActiveMQ as a JMS provider that mimics Service Bus behavior locally.

    docker run -d --name activemq -p 8161:8161 -p 61616:61616 -e 'ACTIVEMQ_OPTS=-Djetty.host=0.0.0.0' apache/activemq-classic:latest
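Before starting the application against a local container, it can help to verify that the container is actually listening. The following sketch checks the ports published by the two commands (8085 for the Pub/Sub emulator, 61616 for ActiveMQ) with a plain TCP connect. `EmulatorCheck` and `isListening` are hypothetical names, and a successful connect only shows that the port is open, not that the emulator is fully ready.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Checks whether the local emulator ports accept TCP connections (hypothetical helper).
final class EmulatorCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Ports taken from the docker commands in this section.
        System.out.println("Pub/Sub emulator (8085): " + isListening("localhost", 8085, 500));
        System.out.println("ActiveMQ (61616): " + isListening("localhost", 61616, 500));
    }
}
```

A check like this could run as a first step in a local start script, so a missing container shows up as a clear message instead of a connection error deep inside the application log.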

In the end

Cloud migrations don’t need to be lengthy, multi-quarter projects that delay feature delivery and frustrate engineering teams. By using the right abstractions, as in our use case with Kubernetes for runtime portability, Spring Profiles for configuration management, and Infrastructure as Code for reproducibility, you can carry out migrations efficiently and with minimal disruption.

More importantly, this isn’t just about moving from one cloud to another. It’s about designing adaptable systems that evolve alongside changing business priorities, whether driven by cost optimization, regulatory compliance, or strategic shifts. In our Spring Boot cloud migration from GCP Pub/Sub to Azure Service Bus, we showed how using Kubernetes, Spring Profiles, and Terraform simplifies the process, minimizes disruption, and ensures consistent behavior across environments.

The real power of a well-executed migration lies in its ability to transform both technology and teams. It creates a resilient infrastructure while fostering a culture of ownership and collaboration, ensuring that your business can adapt and thrive no matter what the future holds.

If you’re exploring industry-specific cases, check out our work in manufacturing software development.


Jovica Zorić

Chief Technology Officer

Jovica is a techie with more than ten years of experience. His job has evolved throughout the years, leading to his present position as the CTO at ProductDock. He will help you choose the right technology for your business ideas and focus on the outcomes.